Each of these quantities has a precise definition and is informative about some aspect of the current state of the physical world. For example, the mass of an object can tell you how much work is necessary to lift it at a certain height. The outside air temperature determines the kind of clothes you would wear when you go out. And so on.

Probabilities are also quantities that measure something — they have a very precise and unambiguous mathematical definition. But still, they don’t relate to things in the physical world as straightforwardly and as intuitively as measures like mass and length.

What does it mean to say that there is a probability of 47% that it will rain during a particular day? Or that the probability of winning the lottery is 0.00000032%? How would knowing the probability of an event be useful to you in your decisions that depend on that event?

This post’s aim is to explore the meaning of probabilities. More precisely, I’m going to focus on the question: how do probabilities, as a mathematical concept, relate to the physical world?

Table of Contents

Introduction

There are 4 main definitions of probabilities which answer the last question. These definitions view probabilities as either:

- Long-term frequencies

- Physical tendencies/propensities

- Degrees of belief

- Degrees of logical support

These aren’t really competing definitions because each of them focuses on a different aspect of the general concept. What they do have in common is that they all respect the mathematical definition of probability based on the 3 axioms suggested by the Russian mathematician Andrey Kolmogorov in the 20th century.

I introduced these axioms in the first section of my post about sample spaces but you don’t necessarily have to be familiar with them to follow the rest of this post. What you should know, however, is that probabilities are restricted to be numbers between 0 and 1 (or 0% and 100%, which is the same thing). This is one of the important differences between them and the other measures I listed in the beginning.

Before I go into details about the 4 definitions, I want to introduce the so-called classical definition of probability which will give some context for the emergence of the others.

The classical definition of probability

Important names:

The first serious attempt to define probability was in the 17th century and is now known as the classical definition. It preceded Kolmogorov’s (much more) rigorous mathematical definition by 3 centuries.

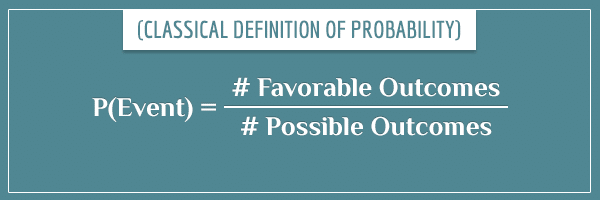

According to this early definition, you first need to conceptually break down the possibility space (which is another name for the sample space) into equally likely and mutually exclusive outcomes. Then, the probability of an event is defined as the number of outcomes favorable to the event, divided by the total number of possible outcomes.

What does favorable mean in this context?

An example

You roll a fair die and want to calculate the probability of rolling an odd number. Because the die is fair, it’s equally likely to end up with any of its 6 sides facing up. Breaking down the possibility space to these 6 outcomes satisfies the requirement of the definition:

- They cover all possibilities, assuming the die can’t remain stable on an edge or a vertex.

- All outcomes are equally likely.

- The outcomes are mutually exclusive (you can’t get two numbers as the outcome of a single roll).

The outcomes that are favorable to the event “roll an odd number” are 1, 3, and 5. This is 3 out of the 6 outcomes, so the probability of rolling an odd number is 3/6 (or 0.5).

Breaking down the possibility space

There are different ways to break down the possibility space, all of which satisfy the definition.

For example, instead of breaking down a die roll into 6 possible outcomes, you can break it down to 2 or 3:

- Odd vs. even number

- Less than 4 vs. greater than or equal to 4

- {1, 2}, {3, 4}, {5, 6}

The level at which you break down the possibility space depends on the type of the event. The important thing to consider is if your event (and only your event) can be covered completely by a subset of your possibility space.

The first breakdown (odd vs. even) would still work for calculating the probability of rolling an odd number. But the other two wouldn’t because the possible outcomes are only partly consistent with the event. So, neither would be completely favorable or unfavorable.

The principle of indifference

Another thing to consider is how to determine if individual outcomes of a breakdown are really equally likely to occur.

This is decided by the so-called principle of indifference. This principle is at the heart of the classical definition but it’s also very important for some of the other ones. It states that:

- Two outcomes should have the same probability if they’re symmetric with respect to the factors that cause them.

In the die rolling example, all 6 outcomes are symmetric because the only difference between them is in the side labels. And labels don’t physically affect the process in any way. So, any argument you can give for why a particular outcome is more likely than the others can also be used to argue for any of the other outcomes.

The classical definition isn’t exactly wrong. Actually, it is completely consistent with the mathematical definition, but it is limited. It’s only defined for finite sample spaces that can be conceptually broken down to equally likely outcomes.

Probability as long-term frequency

Important names:

According to this definition, the probability of an event is equal to the long-term frequency of the event’s occurrence when the same process is repeated many times.

Under this definition, the probability of rolling an odd number is 0.5 because rolling the die many times leads to roughly half of the rolls being odd.

This definition is different from the classical in two major ways:

- It doesn’t refer to the principle of indifference. More generally, probabilities of single events aren’t determined in advance by conceptually analyzing the sample space.

- Probabilities are not defined for single trials. This is a very important distinction that separates this definition from the others. There is no probability of rolling an odd number in a single roll. The true probability is equal to the percentage of “odd number” outcomes from a hypothetical series of infinite number of rolls.

A consequence of the second point is that probabilities only make sense when assigned to repeatable events:

- Rolling a die

- Flipping a coin

- Drawing a card from a deck

- Measuring the position of an electron in an atom

- Etc.

With this definition, you don’t calculate probabilities of particular hypotheses. Each hypothesis is either true or false and probabilities don’t quantify any uncertainty.

This is a counter-intuitive aspect of this definition that many people struggle with because we’re all used to saying things like “it’s unlikely that X is true”, implying that the probability for X to be true is low. But these kinds of statements are meaningless if you use the long-term frequency definition.

Probability as propensity

Important names:

Under this definition, probability is a physical propensity/tendency of certain events to occur under particular conditions. For example, a coin lands heads or tails with a propensity of 0.5 (this isn’t quite true because regular coins do land on their edge once in a few thousand flips). A fair die has a propensity of 1/6 to land with either side facing up. You get the idea.

The law of large numbers

This definition of probability is somewhat complementary to the frequentist one. It specifies the source of the long-term frequency of occurrence of certain events. Namely, the physical properties of the objects involved in the process.

For that, it relies on the law of large numbers. This law is a proven mathematical theorem which links probabilities to frequencies. The law states that:

- As you continue to repeat the same process, the relative frequency of occurrence of a particular outcome will get closer and closer to that outcome’s probability.

For example, if you flip a fair coin 10 times, it’s not that unlikely to get 7 heads, instead of the “expected” 5. In this case, the relative frequency of heads would be 7/10, or 0.7. But if you flip the same coin 1000 times, the relative frequency of heads will be much closer to 0.5. What the law of large numbers says is that, as you increase the number of flips, the relative frequency of heads will get closer and closer to 0.5.

Relation to other definitions

The advantage this definition has over the frequentist one is that here you can talk about probabilities of single events and not just long-term frequencies.

The propensity definition also explains the principle of indifference with the physical properties of objects. For example, a die is not biased to land on any particular side precisely because it is a perfectly symmetric cube, has a uniformly distributed density, and so on (of course, no real die is really that perfect).

Probability as degree of belief

Important names:

According to this view, probabilities measure degrees of belief. These can be beliefs about the occurrence of an event, the truth of a hypothesis, or the truth of any random fact. In other words, probabilities represent how certain you are about the truth of statements. These statements can refer to the past, the present, or the future.

A probability of 1 represents the certain belief that something is true and a probability of 0 represents the certain belief that something is false. Anything in between implies some uncertainty about the truth of the event/hypothesis.

Probabilities should reflect your knowledge and experience. The principle of indifference is also used here to assign probabilities to events when you know nothing about them or when you have no reason to believe any event is more likely than the others.

With this definition, you can still talk about probabilities of single events, just like with the propensity definition. In fact, this is the main advantage of thinking about probabilities as degrees of belief — you can attach probabilities to virtually any statement. Unlike the previous two definitions, here there is no requirement for repeatability of the events either.

Updating probabilities

Probabilities are updated using Bayes’ theorem, where your initial belief is your prior probability for an event, which can be updated into a posterior probability with new information. If this terminology is new to you, I encourage you to take a look at the post I linked to, as well as this one, where I explore the intuition behind Bayes’ theorem.

The last point is the main difference between this definition and the previous ones. Proponents of the frequentist and propensity definitions do not update probabilities with Bayes’ theorem, but from direct physical observation and maybe from analyzing the physical properties of the objects involved in the process which generates probabilistic outcomes.

Logical probability

This definition is actually very similar to the previous one. The main difference is that, instead of degrees of belief, here you talk about the degree of logical support for a particular hypothesis. In this view, probabilities are a generalization of classical logic.

In the most general sense, a probability is a number attached to a statement. That number specifies how likely it is that the statement is true. Examples of such statements are:

- A coin will land on its “tails” side.

- It will rain tomorrow.

- Your girlfriend is pregnant.

- The cat trashed the house.

Each of these statements is true with some probability. If it’s 0, the statement is definitely false, and if it’s 1, the statement is definitely true. So far, this is really just good old logic.

What logical probabilities introduce is a way to handle uncertainty. If a probability is any number other than 0 or 1, then there is some level of uncertainty about the truth of the statement. The uncertainty grows as the probability gets further away from 0 and 1 (and closer to 0.5). It’s greatest when the probability is exactly 0.5 because then the evidence is equally undecided about the truth of the statement.

With this definition, you still update probabilities with Bayes’ theorem. The difference is that you are more constrained in your initial assignment of priors. You can’t appeal to vague things like your gut feeling. However, there’s still some disagreement among theoreticians on this point.

Summary

In the beginning I started with the question:

- What do probabilities measure?

One way to answer this question is by replacing the word probability with a synonym whose meaning is more intuitive. The 4 popular synonyms of probability I introduced in this post are:

- Long-term frequencies

- Physical tendencies/propensities

- Degrees of belief

- Degrees of logical support

If you think about it, these different views of probability are actually complementary. They’re not rival views because they are quite interrelated.

The definitions complete each other

If you know that a physical process produces certain outcomes with certain long-term frequencies, it’s natural to think that the process has the physical propensity to produce these outcomes with that frequency. Then it’s also natural to adjust your degrees of belief (expectations) for each outcome, so they match that propensity. Or you can go the other way around and form your expectations for the frequency of occurrence of an outcome (by appealing to the law of large numbers) after a logical analysis of the sample space.

When I give examples of probabilities in my posts, I implicitly assume one or more of these definitions. It’s more natural to think about the probabilities of coin flips and die rolls as long-term frequencies or physical propensities. But when talking about the probability of rain or the probability of a particular hypothesis, it’s easier to assume the degree of belief/logical support definitions.

I usually don’t clarify which definition I’m using. I simply view probabilities as more general measures that satisfy Kolmogorov’s axioms. And I would personally encourage you to do the same.

Nevertheless, knowing these different philosophical definitions is helpful in getting an intuitive understanding of how probabilities relate to their mathematical definition and to the physical world. I hope this post gave you a better feeling of how to bridge this gap.

If you liked this post, be sure to also check out my post comparing Frequentist and Bayesian approaches to statistics.

It’s funny that you’d say that Fisher was interested in the performance of the procedure as only justification when in fact modern Fisherians blame this on N-P practitioners, claiming that Fisher did no such thing 🙂 A discussion on the topic can be seen here: https://errorstatistics.com/2014/08/17/are-p-values-error-probabilities-installment-1/

Same can be said for Pierce, he, just as Fisher, was much more behavioristic than described here, while Neyman was even more behavioristic than Pierce: https://errorstatistics.com/2016/08/16/performance-or-probativeness-e-s-pearsons-statistical-philosophy-2/

Is it possible your comment was intended for another post? 🙂

No, that’s the one, but if you find it irrelevant to the article for some reason, feel free to remove it 🙂

Could you quote the parts of the article you’re responding to? I’m not sure what you mean by “the performance of the procedure”.

Thank you so much for your insightful posts. I am currently taking biostatistics class and I find it really difficult to grasp the content and I am struggling in class. But your posts make me understand the concept almost instantly, you have a really great talent for explaining things and I really appreciate all your posts! You should write a textbook, I would definitely read it…

Hi Sherryn! Thank you very much for this feedback!

Out of curiosity, which topics from probability theory and statistics does your class cover? And which of them are you struggling with most?

Thanks for the detailed explanation which is full of fun to read.

I have seen several posts written by you on various topics related to Probability Theory.

Is there any correct sequence of these posts that should be followed by a beginner to get good understanding?

Hi, Prashant!

The general philosophy I am trying to follow when writing posts is to assume as little as possible about the readers’ background. Sometimes understanding a certain probability theory concept requires familiarity with some other concept(s). In such situations, I would first write a post about the latter, even if my original post idea was different. And when I finally write the post I originally intended, I put links to those more basic concepts when I refer to them.

For example, some time ago I started writing a post on probability distributions, but decided that it would be better if I first introduced more fundamental concepts like mean, variance, the law of large numbers, and expected value.

In other words, as a beginner, you can safely read my posts in chronological order, starting from the oldest. With each post, I am trying to paint some aspect or detail of the bigger picture regarding probability theory.

Having said that, there are a few posts that are more fundamental than others. I would suggest getting a good understanding of the following concepts which are likely to be encountered in almost any area of probability theory:

– Sample spaces

– Compound events

– Combinatorics

– The law of large numbers and expected value

– Probability distributions

Let me know if this helps.

By the way, could you tell me a little more about your mathematical background? If you are motivated to get a really deep understanding of probability theory, I could recommend reading about some more general mathematical concepts that will be very useful along the way.

Honestly having seen a lot stats.stackexchange answers, quora answers, books, etc from top researchers or whatever. I have to compliment you in the sense that you are able to explain it in the most succint, none pretentious and intuitive way that I have found. I congratulate you on that. As Einstein said once: “that all physical theories, their mathematical expressions apart ought to lend themselves to so simple a description ‘that even a child could understand them.’ ”

As a first stage most of the times, the math notation tends to overwhelm and complicate understanding.

I’m also curious what is your mathematical background?

Hi, Jose! Thank you for the positive feedback and for your interest! I am always glad to find out someone has benefited from reading an article I’ve written.

My formal background is in psychology, cognitive science, and neuroscience. My second master’s degree was very computational and throughout my education I’ve had courses on topics like calculus, differential equations, linear algebra, statistics, and machine learning.

I’ve also studied a lot of mathematics by myself. I have interest in more abstract areas like set theory, abstract algebra, and measure theory.

In fact, my motivation for having this writing style comes from my initial struggles with reading more complicated mathematical books by myself (including on probability theory). At first, it would take me a lot of time to get my head around what the authors were trying to explain. At times I would struggle with even parsing the sentences. But once I understood the points they were making, it suddenly all made sense. Then I would try to rephrase the same points in my own words. And, in the process, I would try to think of alternative and more intuitive ways of explaining the same thing.

As in, “If I were the one (and not the book) who had to explain the same thing to myself before I understood it, how would I have done it so I would understand it faster?”.

So, in a way, when writing my posts here, I mostly try to imagine the version of myself from years ago as “the reader” and explain the same concept in a better and more intuitive way.

By the way, my real interest in probability theory first came a little over 10 years ago, when I started playing poker as a hobby (and semi-professionally after that). Once I started studying it, however, I began to enjoy and get fascinated by probability theory independently of poker. And, to this day, it’s my favorite mathematical subject.

Thanks for your post. I ended up here from your Frequentist vs. Bayesian post.

I’m a grad student in statistics.